My previous article “Inotify in Containers” demonstrated that when a ConfigMap is mounted as a directory, any change to the ConfigMap propagates to the related pods and can be detected with inotify-like APIs.

A follow-up question might be: how should a well-behaved application react to this trigger? And what if the application is ill-designed?

To clarify this, I’ve conducted a series of experiments covering three possible ConfigMap-reloading strategies:

- Built-in auto-reloading apps

- External signals

- Pod rollout

In this article I’m going to explain the experiments and preliminary findings. All experiment materials are available in the configmap-auto-reload repo.

Built-in auto-reloading apps

Some applications (e.g., Traefik) are smart enough to gracefully reload themselves, without downtime, whenever they detect a configuration change. Will this work with a Kubernetes ConfigMap?
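To make “detecting a configuration change” concrete, here is a minimal polling sketch. It is not how Traefik itself is implemented; it only illustrates the idea with a content fingerprint. The function names `config_fingerprint` and `wait_for_change` are made up for this illustration, and GNU find, xargs and coreutils are assumed. Files in a ConfigMap volume are exposed through symlinks (via a hidden `..data` entry), so the sketch follows symlinks and skips the hidden `..` entries.

```shell
#!/usr/bin/env bash

# Print one SHA-256 fingerprint covering every regular file under a directory.
# -L follows symlinks, because ConfigMap volumes expose files through symlinks.
# Hidden "..*" entries (e.g. ..data) are skipped so each file is hashed once.
config_fingerprint() {
  local dir=$1
  (
    cd "$dir" || exit 1
    find -L . -type f ! -path './..*' -print0 \
      | sort -z \
      | xargs -0 -r sha256sum \
      | sha256sum \
      | cut -d' ' -f1
  )
}

# Poll until the fingerprint differs from the last known one,
# then print the new fingerprint and return.
wait_for_change() {
  local dir=$1 last=$2 interval=${3:-5} current
  while :; do
    current=$(config_fingerprint "$dir")
    if [[ "$current" != "$last" ]]; then
      printf '%s\n' "$current"
      return 0
    fi
    sleep "$interval"
  done
}
```

An application with built-in reloading would run the equivalent of `wait_for_change` in the background and re-read its configuration each time it returns. Polling is used here only to keep the sketch dependency-free; inotify-style notifications avoid the polling delay.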

See the traefik-example demo:

Perfect! Traefik auto-reloads itself as long as you correctly mount the traefik-config ConfigMap as the /etc/traefik/ directory in the pod. Here’s the related code snippet:

    [file]
    watch = true
    directory = "/etc/traefik/"

External signals

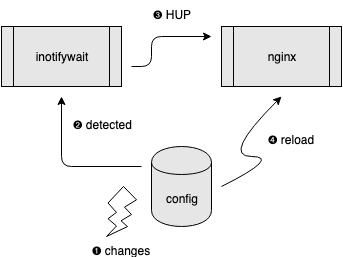

Some applications can reload their configuration, but not automatically. Instead, they reload only when they are told to do so. For example, Nginx reloads when it receives a HUP signal (nginx -s reload) [1], and Apache HTTP Server reloads when it receives a HUP signal (apache -k restart) or a USR1 signal (apache -k graceful) [2]; both pick up the new configuration without downtime.

Who should be the HUP signal sender in Kubernetes?

Before Docker and Kubernetes ruled the world, there were already plenty of file-watching tools, e.g., inotify-tools and Chokidar CLI. People used them to watch for changes in specified directories and to invoke dedicated actions accordingly (including sending signals, of course).

Will this combo trick work with Kubernetes ConfigMap?

See the inotifywait-example demo:

Good! Inotifywait detects the changes and sends HUP signals to Nginx as long as you correctly mount the nginx-config ConfigMap as the /etc/nginx/ directory in the pod. Here’s the related code snippet:

    if [[ "$(inotifywatch -e modify,create,delete,move -t 15 /etc/nginx/ 2>&1)" =~ filename ]]; then
        echo "Try to verify updated nginx config..."
        nginx -t
        if [ $? -ne 0 ]; then
            echo "ERROR: New configuration is invalid!!"
        else
            echo "Reloading nginx with new config..."
            nginx -s reload
        fi
    fi

DISCLAIMER: this is just a demo, not a robust implementation. For more examples, see [3][4][5].

CAUTION: this goes against the “one process per container” best practice [6]. If you really want to use this combo trick, try to model it as “multiple containers within a single pod.”
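As a variation on the snippet above, the following sketch blocks on inotifywait until an event occurs instead of sampling fixed 15-second windows. It is only an illustration under stated assumptions: the function names `reload_if_valid` and `watch_and_reload` are hypothetical, inotify-tools must be installed, and the validate and reload commands are passed in as plain strings (so they are split on whitespace and must not contain quoted arguments).

```shell
#!/usr/bin/env bash

# Validate first, and reload only when validation succeeds.
# Both commands are parameters so the helper is not tied to Nginx.
reload_if_valid() {
  local validate_cmd=$1 reload_cmd=$2
  # Intentionally unquoted: "nginx -t" must split into command and argument.
  if ! $validate_cmd; then
    echo "ERROR: new configuration is invalid; keeping the old one" >&2
    return 1
  fi
  echo "Reloading with new config..."
  $reload_cmd
}

# Wait for a change in the directory, then validate and reload. Repeats forever.
watch_and_reload() {
  local dir=$1 validate_cmd=$2 reload_cmd=$3
  # inotifywait exits with status 0 once a watched event has occurred.
  while inotifywait -q -e create,moved_to,delete,modify "$dir" >/dev/null; do
    sleep 1  # give the update a moment to settle before validating
    reload_if_valid "$validate_cmd" "$reload_cmd" || true
  done
}

# Example invocation (not run here):
#   watch_and_reload /etc/nginx "nginx -t" "nginx -s reload"
```

If the watcher runs in a separate container of the same pod, the reload command would have to reach the Nginx process in the other container, for example by sending it a signal across a shared process namespace. That wiring is left out of this sketch.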

Pod rollout

Some applications do not have any configuration-reloading mechanism. What should we do? Perhaps the only reasonable way is to roll out their running instances, that is, to replace them with new ones that start with the new configuration.

Reloader is a generic solution for Kubernetes. With its help, pods are restarted whenever a related ConfigMap changes.
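The general idea behind “restart the pods when the configuration changes” can be sketched by hand: changing anything in a Deployment’s pod template triggers a rolling update, so stamping a checksum of the configuration onto the template is enough. The sketch below is not Reloader’s implementation; the function names and the annotation key `example.com/config-checksum` are made up for illustration, and sha256sum from GNU coreutils is assumed.

```shell
#!/usr/bin/env bash

# Read configuration content from stdin and print its SHA-256 checksum.
config_checksum() {
  sha256sum | cut -d' ' -f1
}

# Print a JSON patch that writes the checksum into the pod template annotations.
# The annotation key is a hypothetical name chosen for this sketch.
build_rollout_patch() {
  local checksum=$1
  printf '{"spec":{"template":{"metadata":{"annotations":{"example.com/config-checksum":"%s"}}}}}\n' "$checksum"
}

# Example usage (not run here; needs kubectl and access to a cluster):
#   sum=$(kubectl get configmap nginx-config -o jsonpath='{.data}' | config_checksum)
#   kubectl patch deployment nginx -p "$(build_rollout_patch "$sum")"
```

When the ConfigMap content is unchanged, the checksum and therefore the patch are identical, so applying it again does not start another rollout.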

See the reloader-example:

Perfect! Reloader performs a rolling update of the Nginx pods as long as you annotate the Nginx deployment with configmap.reloader.stakater.com/reload. Here’s the related code snippet:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      annotations:
        configmap.reloader.stakater.com/reload: "nginx-config"
    ...

Conclusion

If your application is smart enough to gracefully reload itself whenever it detects any configuration changes, it continues working well with ConfigMap in Kubernetes.

If it is not that smart, an easier approach is to use an automatic tool (e.g., Reloader) to perform a rolling update of the related pods.

I do not recommend the watch+signal approach (e.g., inotify-tools). It is error-prone and can leave zombie processes behind.

[1] Nginx official document: Controlling nginx

[2] Apache official document: Stopping and Restarting Apache HTTP Server

[3] An implementation worth studying: https://github.com/rosskukulinski/nginx-kubernetes-reload

[4] Q&A in Stack Overflow: Nginx Reload Configuration Best Practice