Non-stop storage scaling (vertical or horizontal scaling) is essential in a data-intensive system, database servers in particular.

Is it possible in Kubernetes?

In Kubernetes v1.11, the persistent volume expansion feature was promoted to beta and enabled by default. [1] There is also a nontrivial real-world use case: the Strimzi Kafka operator. Strimzi simply builds on the existing Kubernetes storage class mechanism to grow the storage of a Kafka cluster. [2] The volume resizing feature is therefore not exclusive to Strimzi and Kafka; you can use it in your own application once you understand the mechanism.

To get concrete, hands-on knowledge of volume resizing, I conducted a simple experiment on GCP and GKE. The experiment tries to answer the following questions:

1. Does volume resizing really work in Kubernetes?

2. Is the resizing process non-stop?

3. Are data still persistent after resizing?

All experiment materials are available in the vol-resize repo, for your convenience.

About the sample app

A sample app, voltest.sh, is used throughout the experiment. [3] It displays the available disk space at the specified mount point (the 1st argument) and continuously records the readings in the specified output file (the 2nd argument; defaults to "data").
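To make the description above concrete, here is a minimal sketch of a script that behaves like voltest.sh. It is not the actual voltest.sh from the repo; the function names (avail_space, last_serial, record_once, run_forever) and the one-second interval are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical sketch of a voltest-like script; NOT the actual voltest.sh.

# Print the available space of the file system holding "$1", e.g. "4.5G".
# -P keeps each file system on a single output line.
avail_space() {
    df -hP "$1" | awk 'NR == 2 { print $4 }'
}

# Print the serial number of the last record in file "$1", or 0 if the
# file is missing or empty.
last_serial() {
    if [ -s "$1" ]; then
        tail -n 1 "$1" | awk '{ print $1 }'
    else
        echo 0
    fi
}

# Append one record "N : SIZE" to data file "$2" and echo it to the screen.
record_once() {
    mount_point=$1
    data_file=$2
    n=$(( $(last_serial "$data_file") + 1 ))
    echo "$n : $(avail_space "$mount_point")" | tee -a "$data_file"
}

# Main loop: one record per second (assumed interval) until interrupted.
run_forever() {
    mount_point=$1
    data_file="$mount_point/${2:-data}"
    echo "---> Checking $data_file"
    while true; do
        record_once "$mount_point" "$data_file"
        sleep 1
    done
}
```

Calling run_forever /mnt at the end of the script would start the loop. Because each record is appended to the data file, a restarted run picks up the last serial number instead of starting from 1.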

Let’s see how it works. Assume that we are in the /home directory, which has about 4.5 GB of available disk space:

% pwd
/home
%
% df -h
Filesystem      Size  Used Avail Use% Mounted on
overlay          41G   32G  8.2G  80% /
tmpfs            64M     0   64M   0% /dev
tmpfs           848M     0  848M   0% /sys/fs/cgroup
/dev/sda1        41G   32G  8.2G  80% /root
/dev/sdb1       4.8G   38M  4.5G   1% /home
overlayfs       1.0M  160K  864K  16% /etc/ssh/keys
shm              64M     0   64M   0% /dev/shm
overlayfs       1.0M  160K  864K  16% /etc/ssh/ssh_host_dsa_key
tmpfs           848M  736K  847M   1% /run/metrics
tmpfs           848M     0  848M   0% /run/google/devshell

On the first run, it shows the available space of the current directory and records it continuously in the data file within the same directory:

% docker run -it -v $(pwd):/mnt williamyeh/voltest /mnt
---> Checking /mnt/data
1 : 4.5G
2 : 4.5G
3 : 4.5G
4 : 4.5G
5 : 4.5G
6 : 4.5G
7 : 4.5G
8 : 4.5G
9 : 4.5G
10 : 4.5G
11 : 4.5G
12 : 4.5G
^C
%

Interrupt the execution at 12 on purpose, and run again. You’ll see that it picks up the last serial number 12 and continues counting:

% docker run -it -v $(pwd):/mnt williamyeh/voltest /mnt
---> Checking /mnt/data
13 : 4.5G
14 : 4.5G
15 : 4.5G
16 : 4.5G
17 : 4.5G
18 : 4.5G
19 : 4.5G
20 : 4.5G
21 : 4.5G
22 : 4.5G
23 : 4.5G
^C
%

Now the data file should have 23 lines of records:

% cat data
1 : 4.5G
2 : 4.5G
3 : 4.5G
4 : 4.5G
5 : 4.5G
6 : 4.5G
7 : 4.5G
8 : 4.5G
9 : 4.5G
10 : 4.5G
11 : 4.5G
12 : 4.5G
13 : 4.5G
14 : 4.5G
15 : 4.5G
16 : 4.5G
17 : 4.5G
18 : 4.5G
19 : 4.5G
20 : 4.5G
21 : 4.5G
22 : 4.5G
23 : 4.5G
%

In the following experiment we’ll use the app and the data file to answer the 3 questions:

1. Does volume resizing really work in Kubernetes? Just inspect the disk space it displays.

2. Is the resizing process non-stop? Just inspect the screen output.

3. Are data still persistent after resizing? Just inspect the content of the data file.

Ready?

Experiment part 1: initial size

The experiment is conducted on GCP and GKE. However, it should apply to other cloud Kubernetes platforms as well, with minor modification.

➊ Clone the experiment repo to your workspace or Cloud Shell:

% git clone https://github.com/William-Yeh/vol-resize.git

➋ Prepare a Kubernetes cluster in GKE.

➌ Create a 20GB persistent disk named voltest. For example, the following command will create such a persistent disk in the us-central1-a zone:

% gcloud compute disks create \

--size=20GB --zone=us-central1-a \

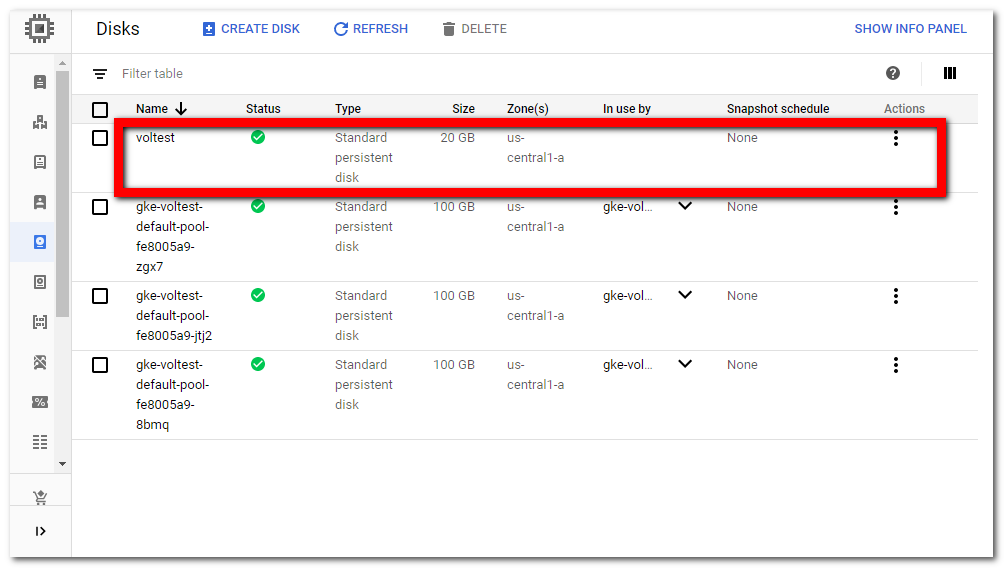

voltestCheck if the underlying persistent disk is created:

➍ We need a storage class with allowVolumeExpansion enabled. The manifest file expansion-ss.yml is provided as follows:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: expansion
parameters:
  type: pd-standard
provisioner: kubernetes.io/gce-pd
allowVolumeExpansion: true
reclaimPolicy: Delete

Create the storage class expansion from it:

% kubectl apply -f expansion-ss.yml

➎ We need a PV manifest file voltest-pv.yml to associate a PV with the persistent disk created earlier:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: voltest
spec:
  storageClassName: "expansion"
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 20Gi
  gcePersistentDisk:
    pdName: voltest
    fsType: ext4

Create the persistent volume voltest now:

% kubectl apply -f voltest-pv.yml
persistentvolume/voltest created
% kubectl get pv
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
voltest   20Gi       RWO            Retain           Available           expansion               8s

Note that the voltest PV is in “Available” status.

➏ We need a PVC manifest file voltest-pvc.yml to claim the PV:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: voltest
spec:
  volumeName: voltest
  storageClassName: "expansion"
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi

Claim the persistent volume now:

% kubectl apply -f voltest-pvc.yml
persistentvolumeclaim/voltest created
% kubectl get pvc
NAME      STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   AGE
voltest   Bound    voltest   20Gi       RWO            expansion      11s
% kubectl get pv
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   REASON   AGE
voltest   20Gi       RWO            Retain           Bound    default/voltest   expansion               13m

Note that the voltest PV/PVC pair is in “Bound” status.
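In a script, the “Bound” check can be automated by polling the phase of the claim. The following is a minimal sketch, assuming kubectl is configured for the cluster; the function names and the one-second polling interval are assumptions for illustration and are not part of the vol-resize repo.

```shell
# Print the phase of PVC "$1" (for example Pending or Bound).
pvc_phase() {
    kubectl get pvc "$1" -o jsonpath='{.status.phase}'
}

# Poll until PVC "$1" reaches phase "$2"; give up after "$3" tries
# (default 30), waiting one second between tries.
wait_for_pvc_phase() {
    name=$1
    want=$2
    tries=${3:-30}
    while [ "$tries" -gt 0 ]; do
        if [ "$(pvc_phase "$name")" = "$want" ]; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}
```

For example, wait_for_pvc_phase voltest Bound returns successfully once the claim is bound, and fails if it is still not bound after the given number of tries.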

➐ We need a manifest file voltest-app.yml for our sample app to access the PV/PVC:

apiVersion: apps/v1
kind: Deployment
...
spec:
  replicas: 1
  ...
  template:   # pod definition
    ...
    spec:
      containers:
        - name: voltest
          image: williamyeh/voltest
          volumeMounts:
            - mountPath: "/mnt"
              name: voltest
      volumes:
        - name: voltest
          persistentVolumeClaim:
            claimName: voltest

Invoke the sample app now:

% kubectl apply -f voltest-app.yml

Open another terminal pane to watch the logs continuously:

% kubectl logs -f deployment/voltest
---> Checking /mnt/data
1 : 20G
2 : 20G
3 : 20G
4 : 20G
5 : 20G
6 : 20G
7 : 20G
8 : 20G
9 : 20G
10 : 20G
11 : 20G
12 : 20G
...

The output looks familiar. Everything works fine.

Keep the logs running. We’ll come back to them again and again.

Experiment part 2: resizing

Now we’re about to resize the volume.

➊ Edit the voltest PVC:

% kubectl edit pvc/voltest

➋ Change the value of spec.resources.requests.storage from 20Gi to 100Gi. Save, and exit.

➌ Check if the logs are still being generated, and the content of the logs.

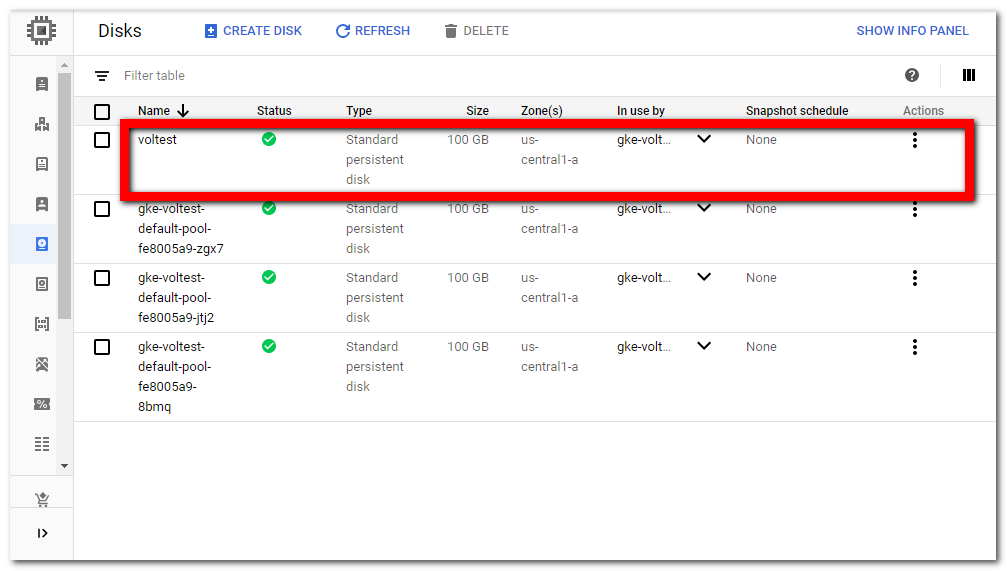

➍ Check the size of underlying persistent disk. It should be expanded to 100 GB now.

➎ Check if the PV/PVC are both expanded:

% kubectl get pv
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   REASON   AGE
voltest   100Gi      RWO            Retain           Bound    default/voltest   expansion               45m
% kubectl get pvc
NAME      STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   AGE
voltest   Bound    voltest   20Gi       RWO            expansion      32m

The PV has been expanded to 100 GB, but the PVC has not. In other words, the block storage volume has been expanded, but the file system has not. The reason is described in the “Resizing Persistent Volumes using Kubernetes” article:

- “Once underlying volume has been expanded by the storage provider, then the PersistentVolume object will reflect the updated size and the PVC will have the FileSystemResizePending condition.”

- “File system expansion must be triggered by terminating the pod using the volume […] then pod that uses the PVC can be restarted to finish file system resizing on the node.”
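The pending state can also be observed from the command line by listing the condition types on the claim. The following is a minimal sketch, assuming kubectl is configured for the cluster; the helper names pvc_conditions and pvc_resize_pending are hypothetical.

```shell
# Print the condition types currently set on PVC "$1", space-separated.
pvc_conditions() {
    kubectl get pvc "$1" -o jsonpath='{.status.conditions[*].type}'
}

# Succeed if PVC "$1" is waiting for a file system resize.
pvc_resize_pending() {
    case " $(pvc_conditions "$1") " in
        *" FileSystemResizePending "*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, pvc_resize_pending voltest && echo "restart the pod to finish resizing" prints the reminder only while the condition is present.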

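By now, the answer to question 2, “Is the resizing process non-stop?”, should be clear: the block storage volume grows while the app keeps running, but the file system does not grow until the pod is restarted. Let’s move on to handle the FileSystemResizePending condition by restarting the related pods.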

Experiment part 3: restart the pod

➊ Kill the pod, and let the Deployment start a new pod for us (replicas=1):

% kubectl get pods
NAME                      READY   STATUS    RESTARTS   AGE
voltest-d88ff8c49-66wk2   1/1     Running   0          23m
% kubectl delete pod voltest-d88ff8c49-66wk2

➋ Watch the logs! Our sample app sees the file system expansion progress on the fly:

13465 : 20G
13466 : 20G
13467 : 20G
13468 : 24G
13469 : 30G
13470 : 30G
13471 : 30G
13472 : 30G
13473 : 30G
13474 : 42G
13475 : 77G
13476 : 99G
13477 : 99G
13478 : 99G
13479 : 99G
13480 : 99G
13481 : 99G
13482 : 99G

➌ Check if the PV/PVC are both expanded:

% kubectl get pv
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   REASON   AGE
voltest   100Gi      RWO            Retain           Bound    default/voltest   expansion               57m
% kubectl get pvc
NAME      STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   AGE
voltest   Bound    voltest   100Gi      RWO            expansion      44m

By now, the remaining questions, “1. Does volume resizing really work in Kubernetes?” and “3. Are data still persistent after resizing?”, should be answered as well: the app now sees about 99G of available space, and its serial numbers continued across the pod restart instead of starting over from 1.
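To go beyond eyeballing the logs, the data file can be checked mechanically. The following sketch assumes records of the form “N : SIZE”, as in the output shown earlier, and verifies that the serial numbers are consecutive starting from 1; check_serials is a hypothetical helper name.

```shell
# Read records of the form "N : SIZE" on stdin and verify that the serial
# numbers run 1, 2, 3, ... with no gap or repeat.
# Prints a summary on success; prints the first offending line and fails otherwise.
check_serials() {
    awk '
        $1 != NR { printf "gap at line %d: got %s\n", NR, $1; bad = 1; exit }
        END { if (bad) exit 1; print NR " records, no gaps" }
    '
}
```

One possible way to feed it, assuming the container image provides cat and the data file lives at /mnt/data, is: kubectl exec deployment/voltest -- cat /mnt/data | check_serials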

Conclusion

As can be seen in this experiment, all you have to do to grow the PV/PVC is the following:

Preparation

- Use Kubernetes ≥ 1.11.

- Set allowVolumeExpansion: true for your storage class, and choose an appropriate underlying provisioner (storage provider).

- Use the storage class in your PV and PVC.

Expansion

- Increase the PVC spec.resources.requests.storage value.

- Restart the related pods.
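The storage class check and the first expansion step can be sketched as non-interactive commands, as an alternative to the interactive kubectl edit used in the experiment. This is a sketch under assumptions: kubectl is configured for the cluster, and the function names are hypothetical.

```shell
# Succeed if storage class "$1" has allowVolumeExpansion set to true.
sc_allows_expansion() {
    [ "$(kubectl get storageclass "$1" -o jsonpath='{.allowVolumeExpansion}')" = "true" ]
}

# Build the patch document that requests a new size, for example 100Gi.
pvc_size_patch() {
    printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}' "$1"
}

# Request size "$3" for PVC "$1", after checking storage class "$2".
expand_pvc() {
    if ! sc_allows_expansion "$2"; then
        echo "storage class $2 does not allow expansion" >&2
        return 1
    fi
    kubectl patch pvc "$1" -p "$(pvc_size_patch "$3")"
}
```

For example, expand_pvc voltest expansion 100Gi would request 100Gi for the voltest claim. The related pods still have to be restarted afterwards, as in part 3 of the experiment.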

Kubernetes 1.11 also introduces an alpha feature called online file system expansion. You can track its progress in the Kubernetes CSI Developer Documentation “Volume Expansion”.

[1] The volume resizing feature has been available since Kubernetes 1.11. For more detail, read the article published by kubernetes.io: “Resizing Persistent Volumes using Kubernetes”.

[2] To learn more about how Strimzi implements volume resizing for Kafka, read these articles: “Persistent storage improvements” and “Resizing persistent volumes with Strimzi”.

[3] The Docker image for this voltest.sh app is available as williamyeh/voltest for your convenience.